Demystifying QEMU, KVM, and Proxmox (and ZFS)

I’ve been running Proxmox VE in my home lab for a long time. It’s reliable, flexible, and powerful—and like many users, I installed it on bare metal and jumped straight into spinning up virtual machines.

But recently, I ran into something that made me realize just how much I had taken for granted: snapshots.

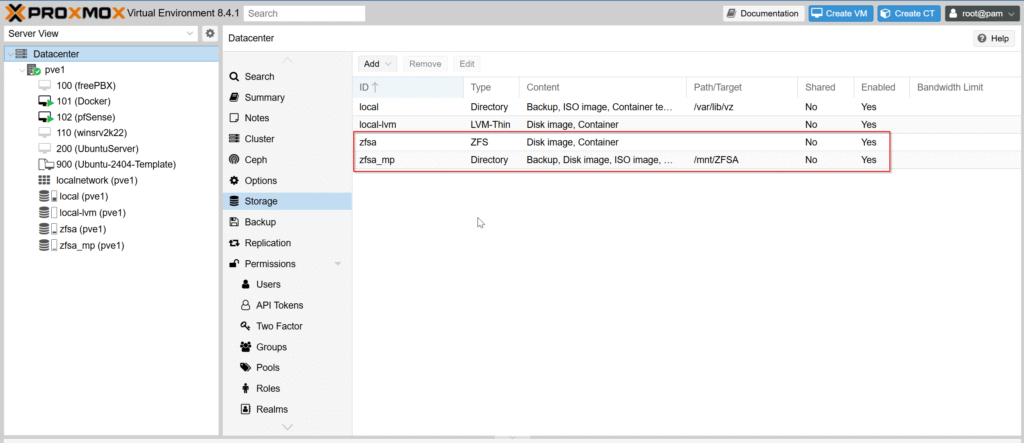

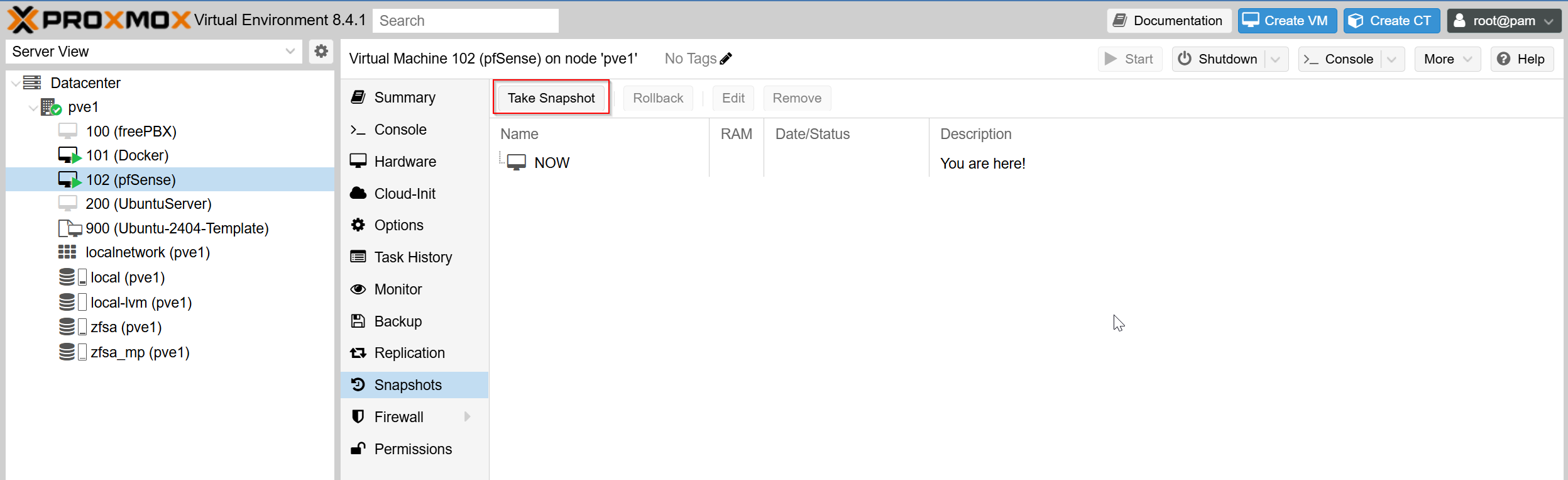

Specifically, I was reviewing my storage setup and clicked “Take Snapshot” on one of my VMs. I had set up a ZFS pool called zfsa, along with a ZFS-backed directory storage (zfsa_mp).

I assumed that Proxmox was triggering a ZFS snapshot. But the more I looked, the less certain I became. Was it actually a ZFS snapshot? Or something else entirely?

This seemingly simple question led me down a much deeper path of trying to understand what’s really happening under the hood in Proxmox. That’s when I began to unravel the roles of QEMU and KVM, and how they relate to each other and to Proxmox itself.

If you’ve been using Proxmox without fully understanding what’s powering it under the surface, this post is for you.

ZFS Snapshots or Something Else?

Let’s start with the question that triggered all of this: What kind of snapshot is created when I click “Take Snapshot” in the Proxmox UI?

It turns out, the answer depends entirely on where your VM’s disk is stored:

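- If your VM disk is a ZFS volume on ZFS-type storage, Proxmox uses native ZFS snapshot capabilities.

- If the disk is a qcow2 image file on directory storage, the snapshot is handled by QEMU as an internal qcow2 snapshot, not by ZFS. This holds even when the directory itself sits on a ZFS dataset. Other backends have their own mechanisms; LVM-thin, for example, uses LVM thin snapshots.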

In my case, even though I had a ZFS-backed storage pool, the VM in question was not using a ZFS volume for its disk. That meant the snapshot I created was a QEMU internal snapshot, not a ZFS one.
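To check which case applies to a given VM, it helps to look at the storage type behind its disk. The sketch below is a hypothetical helper (snapshot_mechanism is a name made up for this post, not a Proxmox command) that maps a storage type and disk format to the snapshot mechanism described above. It only covers the two cases from this post. The guarded commands at the end assume VM ID 102 and the zfsa pool from my setup.

```shell
# Hypothetical helper: map a Proxmox storage type and disk format to the
# snapshot mechanism discussed above. Anything else is reported as "other".
snapshot_mechanism() {
  storage_type="$1"   # storage type, e.g. "zfspool" or "dir" (see `pvesm status`)
  disk_format="$2"    # disk format, e.g. "raw" or "qcow2"
  case "$storage_type:$disk_format" in
    zfspool:*) echo "zfs" ;;    # disk is a ZFS volume: native ZFS snapshot
    dir:qcow2) echo "qcow2" ;;  # image file on a directory: QEMU internal snapshot
    *)         echo "other" ;;  # not covered in this post
  esac
}

snapshot_mechanism zfspool raw
snapshot_mechanism dir qcow2

# On a Proxmox host, look up the real inputs and the resulting snapshots.
if command -v qm >/dev/null 2>&1; then
  qm config 102 || true          # disk lines show "<storage>:<volume>"
  pvesm status || true           # lists each storage with its type
  qm listsnapshot 102 || true    # snapshots Proxmox knows about for this VM
fi
if command -v zfs >/dev/null 2>&1; then
  zfs list -t snapshot -r zfsa || true   # ZFS snapshots under the zfsa pool
fi
```

If the ZFS listing stays empty while qm listsnapshot shows your snapshot, that is a strong hint the snapshot lives inside the disk image rather than in ZFS.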

That realization raised even more questions:

- What exactly is a QEMU snapshot?

- Where does QEMU fit into all this?

- Where does KVM come in?

What started as a simple snapshot mystery became a deep dive into Proxmox’s virtualization stack.

Misconception: “Is Proxmox the Hypervisor?”

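Like a lot of people getting into virtualization, I thought of Proxmox as the hypervisor. After all, it’s what I installed on bare metal, and it’s what I use to create, manage, and monitor my VMs. But that’s not quite right: Proxmox is the management layer, and the actual virtualization work is done by the components underneath it.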

To understand what’s really powering your VMs, let’s explore the two key components underneath Proxmox: QEMU and KVM.

What Is QEMU?

QEMU (Quick Emulator) is a userspace application that emulates hardware—CPUs, NICs, disk controllers, etc. By itself, it’s slow because it has to emulate everything in software. But when paired with KVM, it hands off the CPU and memory virtualization to KVM for better performance.

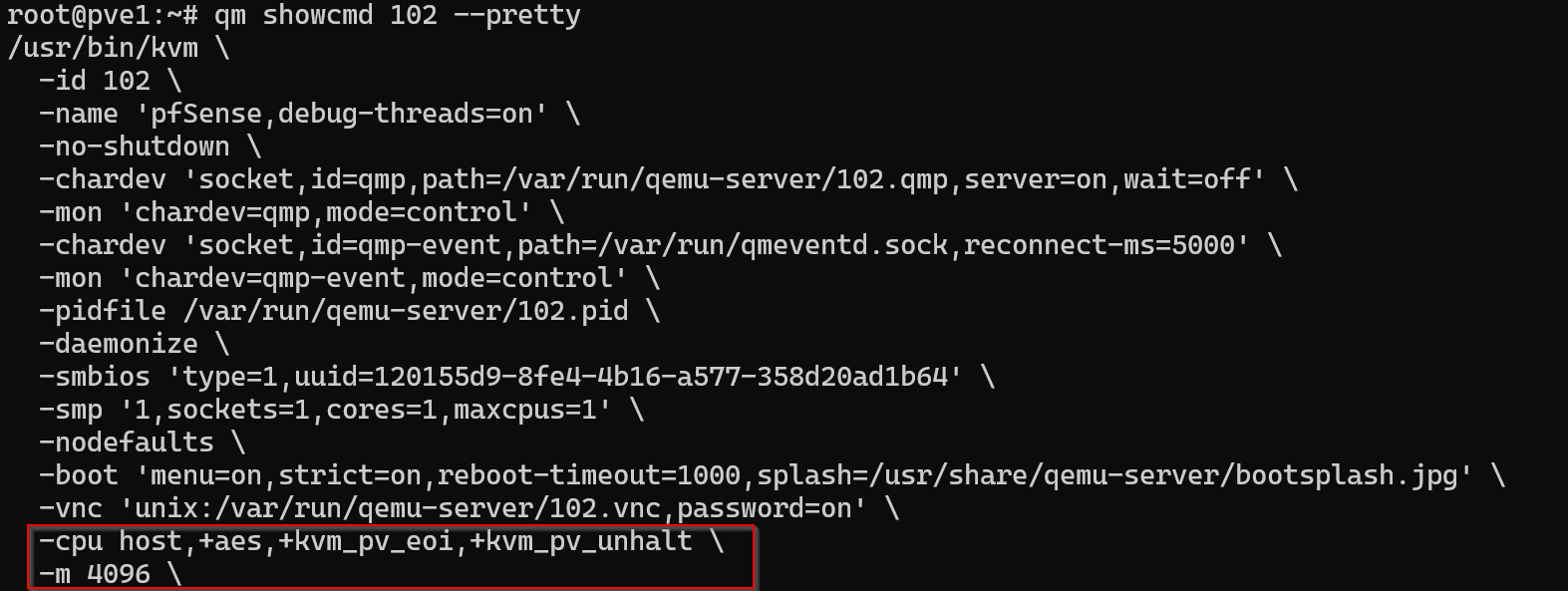

To see what QEMU is doing for a given VM, you can run:

qm showcmd 102 --pretty

Here is a sample of the output highlighting CPU and memory:
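To narrow the full command down to just those flags, a small filter helps. This is a sketch, not part of Proxmox: cpu_mem_flags is a made-up name, and it assumes the --pretty output puts one option per line and that CPU and memory show up as -cpu, -smp, and -m.

```shell
# Keep only the CPU and memory options from a QEMU command read on stdin.
# Assumes one option per line, which is how `--pretty` lays out the command.
cpu_mem_flags() {
  grep -E '^[[:space:]]*-(cpu|smp|m)[[:space:]]'
}

# On a Proxmox host (102 is the VM ID used in this post):
if command -v qm >/dev/null 2>&1; then
  qm showcmd 102 --pretty | cpu_mem_flags || true
fi
```

The trailing whitespace in the pattern keeps longer options that merely start with -m, such as -machine, out of the result.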

What Is KVM?

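KVM stands for Kernel-based Virtual Machine. It’s a Linux kernel module that lets the Linux kernel itself act as a hypervisor. It is often described as a Type 1 hypervisor because it runs inside the kernel, although that classification is debated. It allows virtual machines to run with hardware acceleration using Intel VT-x or AMD-V extensions.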

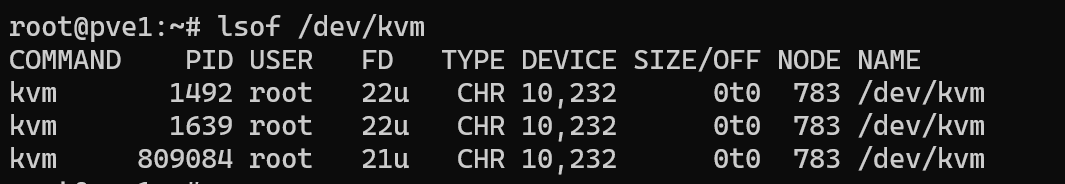

When the KVM module is loaded on a Linux system such as a Proxmox host, a device file called /dev/kvm appears on the file system. QEMU uses this special file as its interface to the kernel, handing off CPU and memory virtualization so that guest code runs with hardware acceleration.
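Before looking at who is using /dev/kvm, it is worth checking that the pieces are in place. The sketch below looks for the vmx (Intel VT-x) or svm (AMD-V) CPU flag and for the device file itself. virt_flag is a helper name made up for this example.

```shell
# Report which hardware virtualization flag appears in cpuinfo-style text on stdin.
virt_flag() {
  flags=$(cat)
  if printf '%s\n' "$flags" | grep -qw vmx; then
    echo "vmx"     # Intel VT-x
  elif printf '%s\n' "$flags" | grep -qw svm; then
    echo "svm"     # AMD-V
  else
    echo "none"
  fi
}

# Check the current host.
if [ -r /proc/cpuinfo ]; then
  echo "CPU virtualization flag: $(virt_flag < /proc/cpuinfo)"
fi
if [ -e /dev/kvm ]; then
  echo "/dev/kvm exists"
else
  echo "/dev/kvm not found"
fi
```

A result of "none" usually means the extensions are disabled in the firmware settings or the CPU does not support them.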

To confirm that virtual machines are accessing /dev/kvm, we can print a list of open files to the terminal and filter for the file using this command:

lsof /dev/kvm

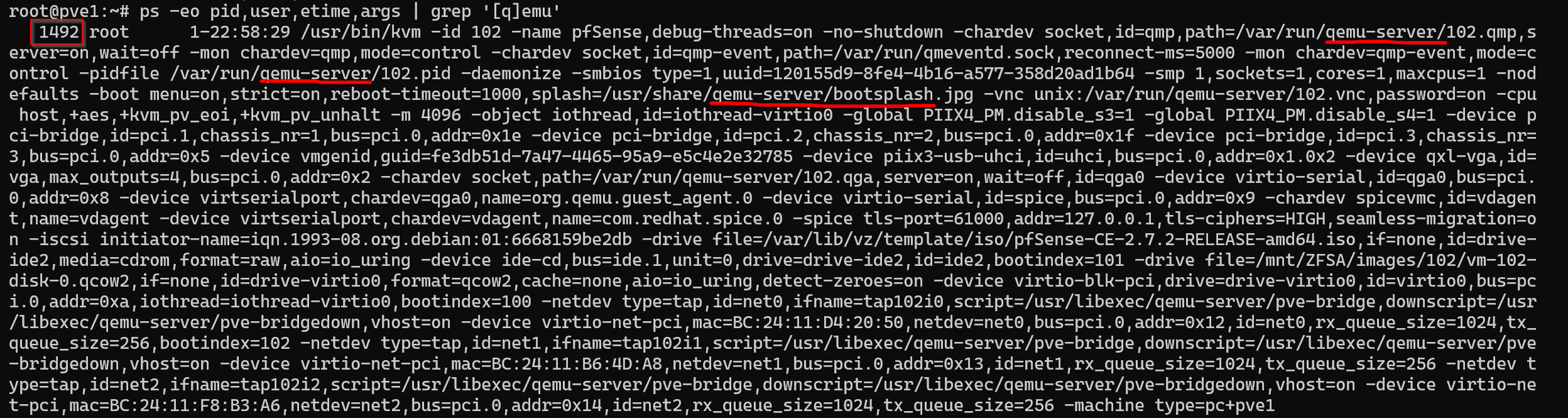

As you can see in the screenshot, three processes are using /dev/kvm. These processes are instances of QEMU, which is utilizing KVM for hardware-accelerated virtualization. To confirm this, I printed a list of processes to the terminal and filtered for qemu:

ps -eo pid,user,etime,args | grep '[q]emu'

QEMU is launched with a long list of arguments, so the output is not very clean, but I could make out that process ID 1492 is a running virtual machine. It is assigned VM ID 102, which is the ID of my pfSense firewall.
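Picking the VM ID out of that wall of arguments by eye gets old quickly. Here is a rough sketch that does it with sed. It assumes the command line contains an "-id <vmid>" argument, which is what I see on my host; vmid_from_args is a made-up helper name.

```shell
# Pull the VM ID out of a QEMU command line read on stdin.
# Assumption: the command contains an "-id <vmid>" argument.
vmid_from_args() {
  sed -n 's/.* -id \([0-9][0-9]*\).*/\1/p'
}

# Print "PID -> VM ID" for every matching process on this host.
if command -v ps >/dev/null 2>&1; then
  ps -eo pid,args | grep '[q]emu' | while read -r pid args; do
    vmid=$(printf '%s\n' "$args" | vmid_from_args)
    if [ -n "$vmid" ]; then
      echo "PID $pid -> VM $vmid"
    fi
  done || true
fi
```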

So Where Does Proxmox Fit?

Proxmox VE is a Debian-based management platform for virtualization. It ties everything together:

- Web UI for managing VMs and containers

- CLI tools like qm for automation

- Features like backups, clustering, and HA

Each VM’s settings live in a config file at:

/etc/pve/qemu-server/<vmid>.conf

Under the hood, Proxmox wraps QEMU + KVM. When you start a VM, Proxmox builds a QEMU command from that config and runs qemu-system-x86_64 with KVM acceleration and a long list of flags for disk, RAM, CPU, network, and more.
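To see the raw inputs to that command, you can read values straight from the config file. The sketch below assumes the usual "key: value" layout and that bracketed sections further down the file hold snapshot state, which is what my own config files look like. conf_value is a hypothetical helper, and 102 is the VM ID from this post.

```shell
# Print the value of one key from the main section of a VM config file.
conf_value() {
  key="$1"
  file="$2"
  awk -v k="$key" '
    /^\[/ { exit }   # stop at the first [section]; those hold snapshot state
    index($0, k ": ") == 1 { print substr($0, length(k) + 3); exit }
  ' "$file"
}

conf=/etc/pve/qemu-server/102.conf
if [ -r "$conf" ]; then
  echo "memory: $(conf_value memory "$conf")"
  echo "cores:  $(conf_value cores "$conf")"
fi
```

Comparing these values with the -m and -smp flags from qm showcmd is a quick way to see the config-to-command translation in action.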

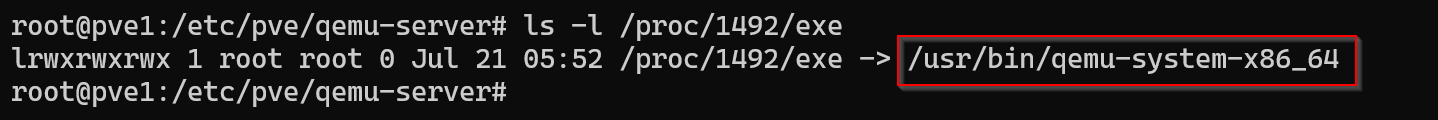

To confirm which binary is being executed for a running VM, we can inspect the symbolic link for its process using:

ls -l /proc/1492/exe
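If you only want the target of that link, readlink prints it without the rest of the ls output. A minimal sketch, Linux only, with exe_of as a made-up helper name:

```shell
# Resolve the executable behind a process ID via its /proc entry (Linux only).
exe_of() {
  [ -e "/proc/$1/exe" ] || return 1
  readlink "/proc/$1/exe"
}

# Example with the current shell; on my host the PID of interest was 1492.
exe_of "$$" || echo "no /proc entry for PID $$"
```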

You don’t see QEMU directly unless you check the actual command being run or dig into the process tree.

Summary: What I Learned

- KVM is the real hypervisor, providing hardware virtualization through the kernel.

- QEMU is the userspace manager that emulates hardware and controls the VM lifecycle.

- Proxmox is a powerful front-end and orchestration layer that wraps QEMU + KVM.

- The snapshot type you get depends on your storage backend—ZFS or not.

- You don’t see QEMU directly in the Proxmox UI, but it’s doing all the heavy lifting behind the scenes.

Closing Thoughts

This experience reminded me that tools can become so familiar we stop questioning how they actually work. Proxmox is incredibly polished and user-friendly, but under the hood it’s still powered by Linux fundamentals: QEMU for emulation, KVM for performance, and ZFS (when configured properly) for storage magic.

Taking the time to trace that stack—from GUI to kernel—has made me a better troubleshooter, a better documenter, and ultimately a better infrastructure technician.

Proxmox is the dashboard—not the engine. The real horsepower comes from the seamless interplay between QEMU, KVM, and your chosen storage backend.